Exploring the Intersection of AI and Nudity: Ethical Challenges and Innovations

Artificial intelligence (AI) has made tremendous strides in recent years, transforming many aspects of our lives. From boosting workplace productivity to personalizing our digital experiences, its reach keeps expanding. Alongside these advances, however, a serious concern has emerged: the use of AI to generate and manipulate nude images. This article examines the ethical challenges and innovations at the intersection of AI and nudity, shedding light on the complexities involved and on potential solutions.

The Rise of AI-Generated Content

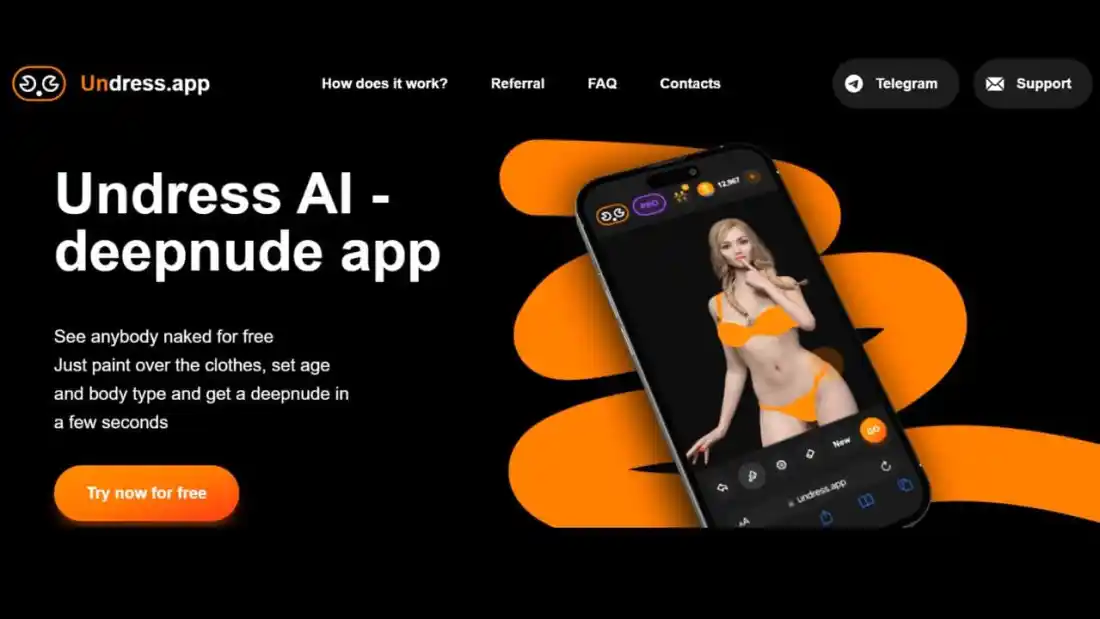

AI technologies, particularly generative models such as Generative Adversarial Networks (GANs) and, more recently, diffusion models, can create highly realistic images and videos. While these advances open exciting opportunities for creativity and innovation, they also pose significant risks when used to generate explicit content. The ability to produce realistic nude images of real people without their consent has sparked intense debate about privacy, consent, and the responsible use of technology.

Ethical Concerns and Risks

One of the primary concerns with AI-generated nudity is its potential for misuse. Deepfakes and other AI-generated explicit material can be used maliciously as non-consensual pornography or to defame individuals. Creating and distributing such content without consent not only violates personal privacy but can also cause serious psychological and reputational harm to the people depicted.

A second ethical issue is the difficulty of distinguishing real content from AI-generated content. As generative models improve, manipulated images and videos become increasingly hard to identify. This fuels misinformation and erodes trust in digital media, because viewers can no longer assume that a photo or video shows something that actually happened.

Addressing the Challenges

To address these challenges, several approaches are being explored:

- Legislation and Regulation: Governments and other organizations are developing legal frameworks to address the misuse of AI to generate explicit content. New laws aim to criminalize the creation and distribution of non-consensual explicit imagery, and platforms are being pressed to adopt stricter policies and technologies to detect, remove, and prevent such content.

- Technological Solutions: Researchers are developing AI tools to detect deepfakes and other manipulated content. These tools use machine learning to spot inconsistencies and anomalies in images and videos, such as implausible lighting, mismatched skin texture, blending seams around faces, or statistical traces that generative models leave in an image, helping to distinguish genuine content from generated content.

- Ethical Guidelines: There is a growing call for ethical guidelines and best practices for AI developers and users. Clear standards, for example safeguards that prevent models from producing sexualized images of real people without their consent, would help the tech community ensure that AI technologies are used responsibly and with respect for individuals' rights and privacy.

- Public Awareness and Education: Increasing awareness about the potential risks of AI-generated content is crucial. Educating the public about the existence of deepfakes and the importance of verifying digital media can help mitigate the impact of malicious uses of AI.

Looking Forward

As AI technology continues to evolve, striking a balance between innovation and ethics is essential. AI offers remarkable possibilities for creativity and progress, but the risks of its misuse must be addressed just as seriously. By fostering collaboration among technologists, policymakers, and the public, we can work toward a future in which AI is used responsibly and ethically, benefiting society as a whole while respecting individual rights and privacy.